The need for an instant response

The fundamental driver for Multi-access Edge Computing (MEC) is latency. As more and more devices connect to 5G mobile networks, demand and expectations for near-instantaneous responsiveness keep rising. As a result, even ‘low latency’ on a network is no longer sufficient for many applications to perform.

Applications that already require a near-instantaneous response are wide and varied, including remote medicine, autonomous vehicles/connected cars, Industry 4.0, gaming and virtual reality, and the list will keep growing. This is where a network’s inherent latency can become a major stumbling block for applications that rely on it to perform as users need and expect.

The trouble with latency

A major contributor to latency is the distance between the end points: the further a request has to travel to reach the service, the greater the round-trip delay. The way to reduce latency is therefore to bring the application closer to the user. However, this is not easy. Traditional data centers are usually located hundreds or thousands of miles away, where land, electricity and water are cheap, often in remote, colder geographies where cooling is not such a big (and by big we mean expensive) issue. For latency-sensitive applications, these remote data centers cannot deliver a high-quality user experience. Delays of more than 100 ms are not uncommon in this traditional infrastructure, whereas some of the applications mentioned above require latency an order of magnitude lower, that is, below 10 ms.
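To put rough numbers on the distance argument, the sketch below estimates only the round-trip propagation delay over optical fiber for a few distances. It assumes signals in fiber travel at about two thirds of the speed of light in vacuum and that the fiber runs in a straight line; real routes are longer and add routing, queuing, processing and radio-access delays, so these figures are lower bounds, not measurements. The function name `fiber_rtt_ms` is hypothetical.

```python
# Rough lower bound on round-trip propagation delay over optical fiber.
# Assumptions (illustrative only): straight-line fiber path, signal velocity
# of about 2/3 c, and no routing, queuing or processing delay.
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_VELOCITY_FACTOR = 2 / 3  # assumed typical value for silica fiber
KM_PER_MILE = 1.609344


def fiber_rtt_ms(distance_miles: float) -> float:
    """Return the minimum round-trip propagation delay in milliseconds."""
    distance_km = distance_miles * KM_PER_MILE
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000  # there and back, converted to ms


for miles in (10, 100, 1000, 3000):
    print(f"{miles:>5} miles: {fiber_rtt_ms(miles):6.2f} ms round trip")
```

Under these assumptions, a 1,000-mile path costs about 16 ms of round-trip propagation delay before any processing takes place, which on its own already exceeds a sub-10 ms budget, while a 100-mile path costs about 1.6 ms.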

MEC - The Solution

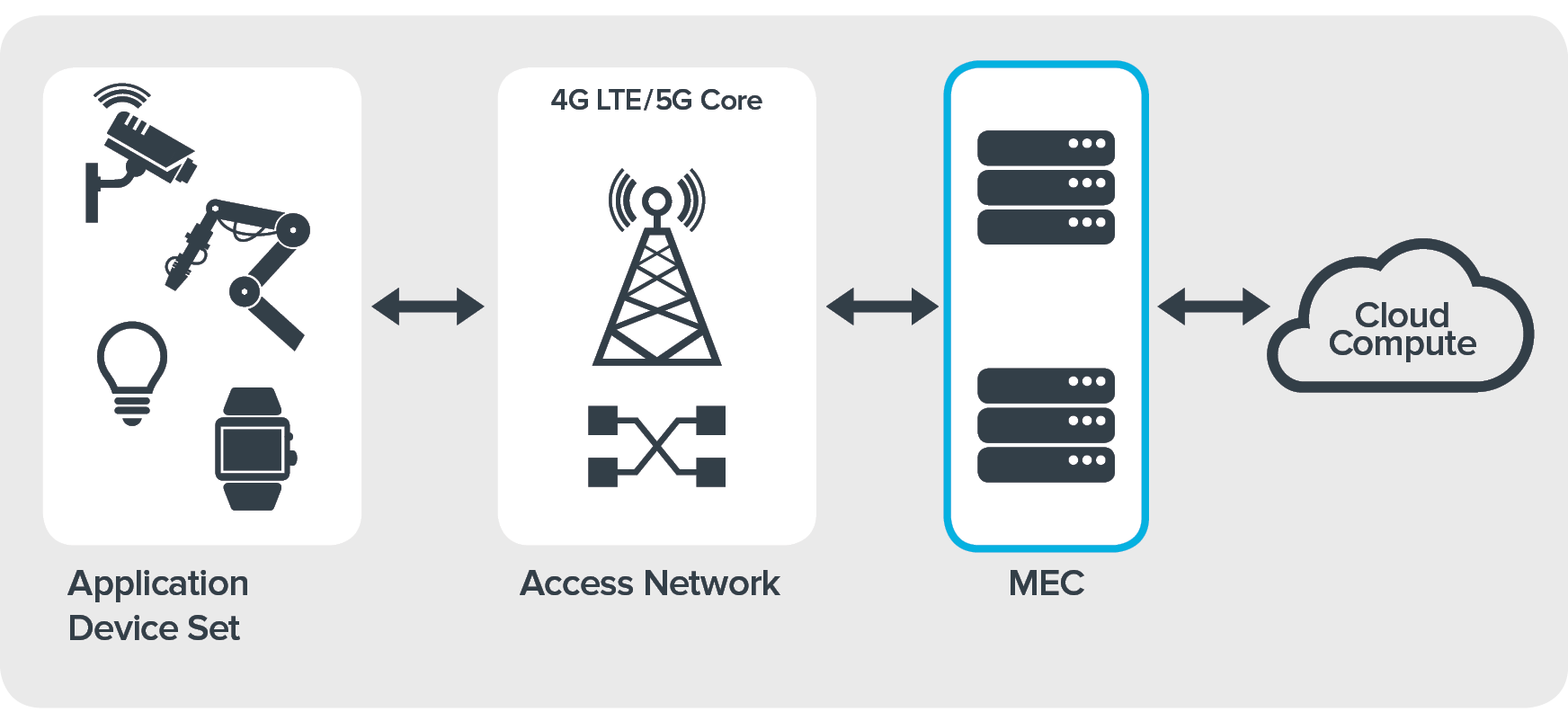

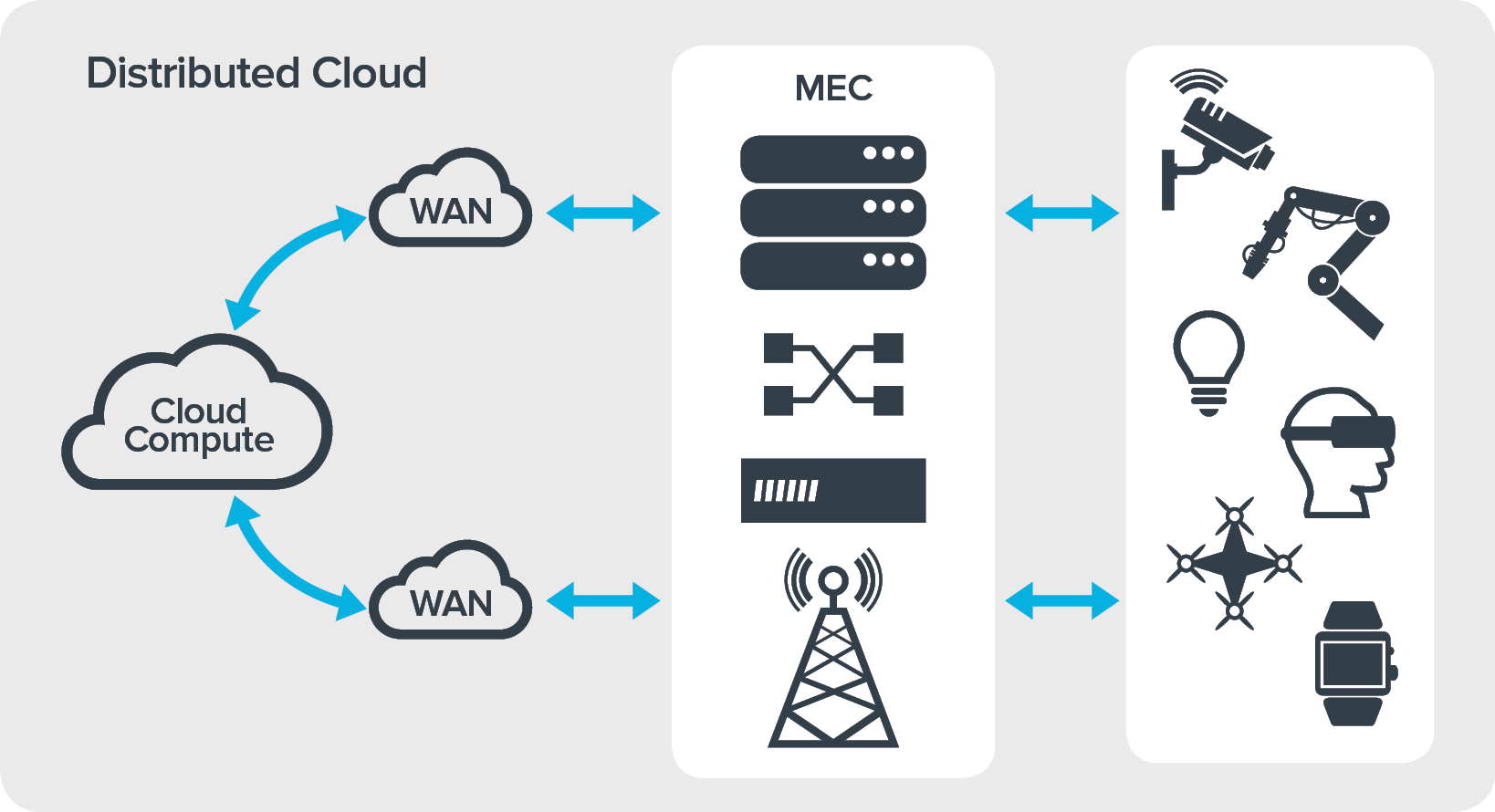

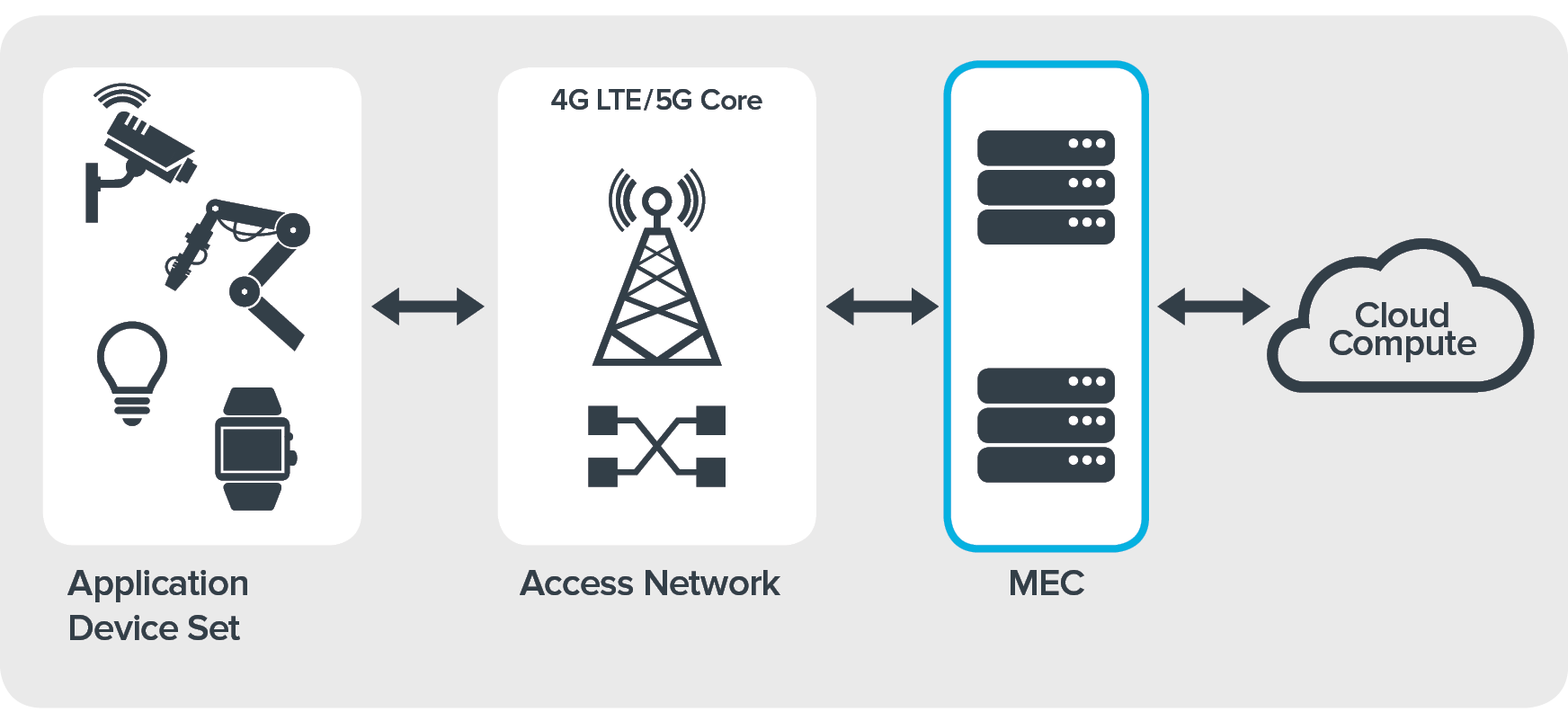

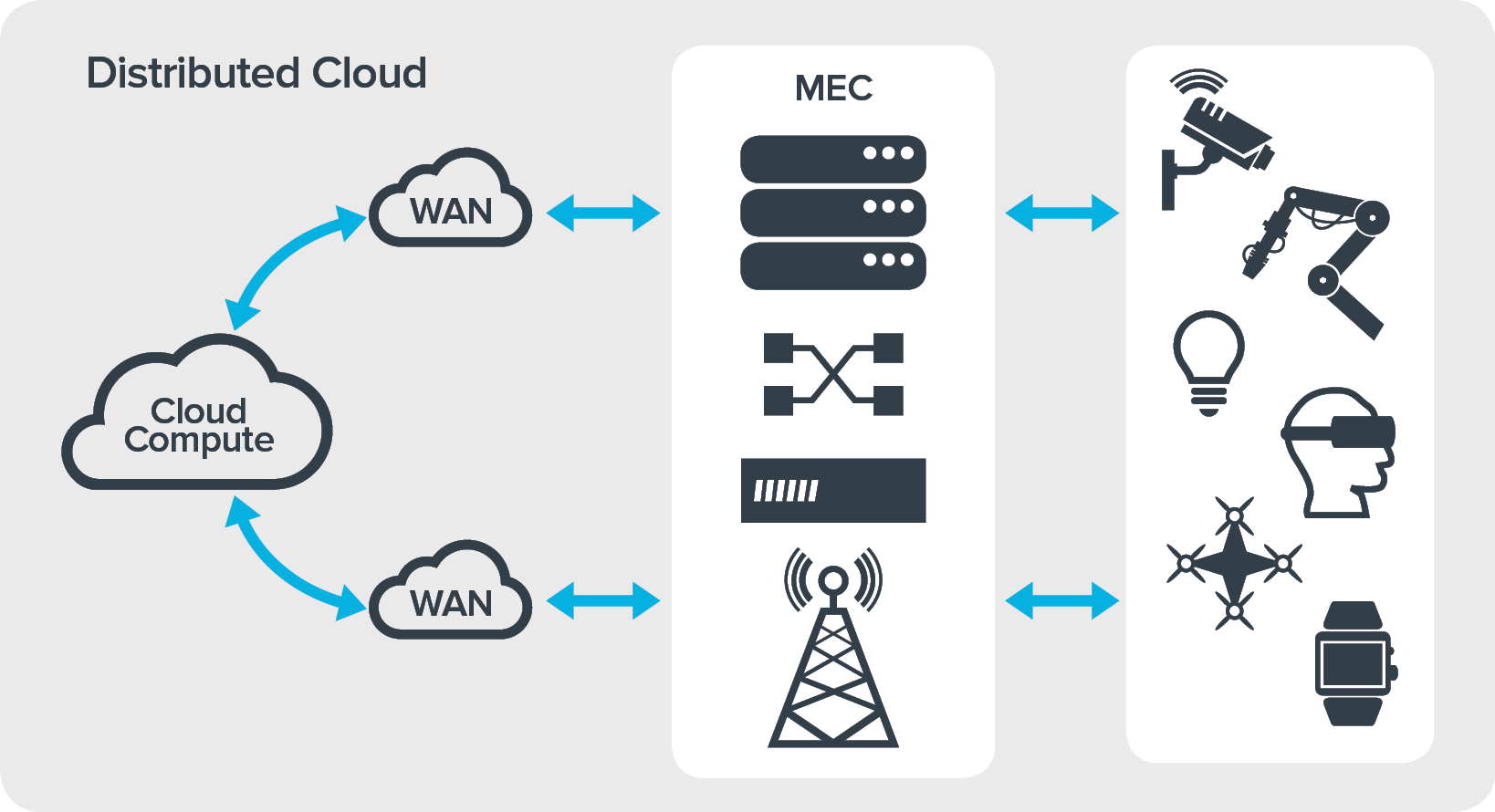

MEC is the solution to this latency problem. It runs applications and related processing tasks closer to the customer, reducing network congestion and latency so that applications respond as quickly as they need to.

Edge computing architectures bring the data center closer to the end user, or closer to where the data is generated. Data is processed and stored at the edge, and only key information is transmitted to the central cloud for backend services support.
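As an illustration of “only key information is transmitted”, here is a minimal, hypothetical sketch of edge-side reduction: a batch of sensor readings stays at the edge, and only a compact summary plus any readings above an alert threshold would be forwarded to the central cloud. The names `EdgeSummary` and `summarize_at_edge`, the choice of summary fields and the threshold are all assumptions for illustration, not part of any MEC product or standard API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class EdgeSummary:
    """Hypothetical compact record sent from the edge to the central cloud."""
    count: int
    mean: float
    maximum: float
    alerts: list[float]  # readings above the threshold, forwarded in full


def summarize_at_edge(readings: list[float], alert_threshold: float) -> EdgeSummary:
    """Reduce a batch of raw readings to key information at the edge.

    The raw batch itself is assumed to stay (and be stored) at the edge.
    """
    if not readings:
        raise ValueError("no readings to summarize")
    return EdgeSummary(
        count=len(readings),
        mean=mean(readings),
        maximum=max(readings),
        alerts=[r for r in readings if r > alert_threshold],
    )


batch = [20.1, 20.3, 20.2, 35.7, 20.0]
summary = summarize_at_edge(batch, alert_threshold=30.0)
print(summary)
```

The design choice here is simply that the upstream payload grows with the number of alerts rather than with the number of raw readings, which is one way edge processing can preserve bandwidth on the path to the cloud.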

MEC is not an alternative to the cloud; it places key data and parts of the application closer to the end user. As a result, MEC can reduce round-trip delay, speed up processing and preserve bandwidth on a customer’s existing network, enabling the instant response required.

Driven by the emergence of 5G and the vision of the Internet of Things, it is safe to say that MEC is here to stay. However, as more new technologies and applications rely on it, both complexity and the opportunity for performance errors increase. To meet the ‘instantaneous’ challenge, getting the architecture right, understanding what can and can’t be deployed at the edge, and optimizing QoS parameters before deployment are more important than ever.

In our next blog, we will look at testing a MEC solution and what you can do before deployment to identify the optimal solution for your applications.

Related product: Calnex SNE

Peter Whitten

Business Development Manager
