Jitter has been around for as long as the telecommunications industry has been trying to shift bits and bytes from A to B. Jitter is not cool: it's unloved, nobody wants it and, just like the bore at an office party, it won't go away. Now, just to be clear, we are talking about physical layer jitter here, i.e., the timing variation that determines whether clock recovery circuits can function correctly and tell whether the data is a one or a zero, and that shows up as closure of an eye diagram in the time domain. If you are expecting to hear about packets or packet jitter, then this blog is not for you!

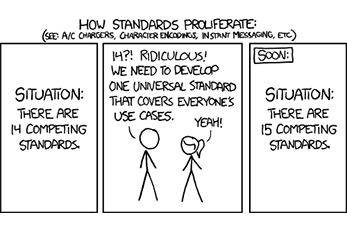

The higher-speed circuitry required for 100GbE makes jitter a much bigger challenge than it was in the past. It's much more difficult to keep eye diagrams clean, open and free of noise at 25GHz clock speeds. The jitter limits for the Ethernet interfaces themselves are specified in IEEE 802.3. However, when the Ethernet clock is also used as part of the synchronization chain (i.e., Synchronous Ethernet, or SyncE), there is another jitter standard that also has to be met: ITU-T G.8262. This places limits on jitter at much lower frequencies to ensure jitter doesn't propagate down the synchronization chain.

G.8262 specifies that a SyncE clock must not generate more than 1.2 UI pk-pk jitter, measured over the 20kHz to 200MHz frequency range (one UI, or unit interval, is one symbol period on the line). This is hard enough, but the sting in the tail is the jitter tolerance limit: the amount of jitter a clock must tolerate on its input and still function correctly. Jitter tolerance is defined in the form of a mask from 10Hz to 100kHz, with as much as 6445 UI of jitter (250ns) at 10Hz.
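The 6445 UI and 250ns figures are the same quantity expressed in different units. Here is a minimal Python sketch of the conversion, assuming a lane rate of 25.78125 GBd (the per-lane signalling rate of four-lane 100GBASE-R interfaces; the post itself only says "25GHz"). The function names are illustrative and do not come from any standard or library. It also expresses the 1.2 UI generation limit in picoseconds, under the further assumption that this limit refers to the same lane rate.

```python
# Assumed lane rate: 25.78125 GBd, the per-lane signalling rate of
# four-lane 100GBASE-R interfaces (an assumption, not stated in G.8262 text here).
LANE_RATE_BAUD = 25.78125e9


def unit_interval_s(baud_rate: float) -> float:
    """One unit interval (UI) is one symbol period: 1 / baud rate, in seconds."""
    return 1.0 / baud_rate


def seconds_to_ui(t_s: float, baud_rate: float = LANE_RATE_BAUD) -> float:
    """Express a time-domain jitter amplitude (seconds) in unit intervals."""
    return t_s / unit_interval_s(baud_rate)


def ui_to_seconds(ui: float, baud_rate: float = LANE_RATE_BAUD) -> float:
    """Express a jitter amplitude given in UI as seconds."""
    return ui * unit_interval_s(baud_rate)


if __name__ == "__main__":
    ui_ps = unit_interval_s(LANE_RATE_BAUD) * 1e12
    print(f"1 UI at 25.78125 GBd = {ui_ps:.2f} ps")  # 1 UI at 25.78125 GBd = 38.79 ps
    # 250 ns of jitter at the 10 Hz point of the tolerance mask
    print(f"250 ns = {seconds_to_ui(250e-9):.0f} UI")  # 250 ns = 6445 UI
    # 1.2 UI pk-pk generation limit, assuming the same lane rate
    print(f"1.2 UI = {ui_to_seconds(1.2) * 1e12:.1f} ps")  # 1.2 UI = 46.5 ps
```

Put another way, the tolerance mask asks a receiver to ride through low-frequency wander thousands of times larger than a single bit period, while the generation limit allows well under a couple of bit periods of output jitter.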

Much of the industry focus has been on meeting the jitter generation requirements but, in reality, experience shows that a lot of the equipment out there can struggle to meet the tight jitter tolerance limits, especially at the top end of the mask, at frequencies around 100kHz. If I were concerned about the quality of the equipment I was about to approve, that's where I'd look. Just saying.